How Enterprise AI Outperforms Large Language Models for Marketing

Last Updated on May 31, 2024

Published: February 10, 2024

By Jayne Schultheis

Although the concept of "artificial intelligence" is at least as old as the Turing machine, which dates back to the 1930s, it became a household term only with the launch of ChatGPT in 2022. OpenAI made the ingenious move of giving this complex technology a simple, conversational user interface and making it available to everyone for free. That brought generative AI and large language models (LLMs) into focus for the general public.

The ease of use and the results of ChatGPT excited and fascinated the masses as well as business professionals. If you ask ChatGPT what it can be used for, the engine suggests the following activities: content generation, answering questions, text summarization, translations, programming and coding, educational support, idea generation, communication and dialog, text analysis, entertainment.*

ChatGPT, it seems, is a know-it-all when it comes to language-related tasks. Unsurprisingly, marketers, whose business it is to develop stories and distribute them to audiences, have pounced on the free tool. In fact, 14% of respondents to a recent McKinsey study said their organizations now use generative AI for marketing and sales. They use AI tools like ChatGPT to write blog articles and social media posts, develop content strategies, create cover letters and more.

But the hype around LLMs as a magic bullet has died down a bit, and a more refined application, enterprise AI, is gaining ground. The more content LLMs have produced, the more obvious their weaknesses have become. Many companies now prohibit the use of ChatGPT for corporate purposes, and a consensus is spreading that the supposedly "all-knowing" ChatGPT, its competitor Google Bard and similar tools could seriously harm companies if used improperly.

Even within the AI industry, this realization has spread. In 2023, the German magazine Der Spiegel published an article declaring that "OpenAI will not dominate the AI era." According to Der Spiegel, OpenAI CEO Sam Altman believes that we have "reached the end of the era of these gigantic models." It's no wonder, then, that there is a growing trend toward smaller, specialized models: "bespoke suits" rather than "off-the-rack" solutions.

Enterprise AI, such as Rellify's Relliverse™, is one form of these "bespoke suits." Before examining enterprise AI in depth, let's take a closer look at large language models, their weaknesses and the risks they can present.

What Is a Large Language Model?

ChatGPT can answer this question, too: A large language model (LLM) is an advanced type of artificial intelligence model designed to understand and generate human-like text in natural language. These models are based on deep neural networks and are trained on vast amounts of text data to perform various language-related tasks. One of the most well-known examples of a large language model is GPT-3 (Generative Pre-trained Transformer 3), developed by OpenAI. ... Large language models such as GPT-3 are prevalent in various fields, including machine translation, text summarization, text classification, chatbots, and even creative content generation. However, they are also the subject of ethical and societal discussions due to their text generation and manipulation capabilities, which can potentially be misused.*

So ChatGPT, itself built on an LLM, offers a relatively superficial answer to a question that is in reality deeply complex. This reveals the great weakness of LLMs: triviality (the answer borders on the banal) paired with apparently supreme self-confidence (the answer presents itself as exhaustive).

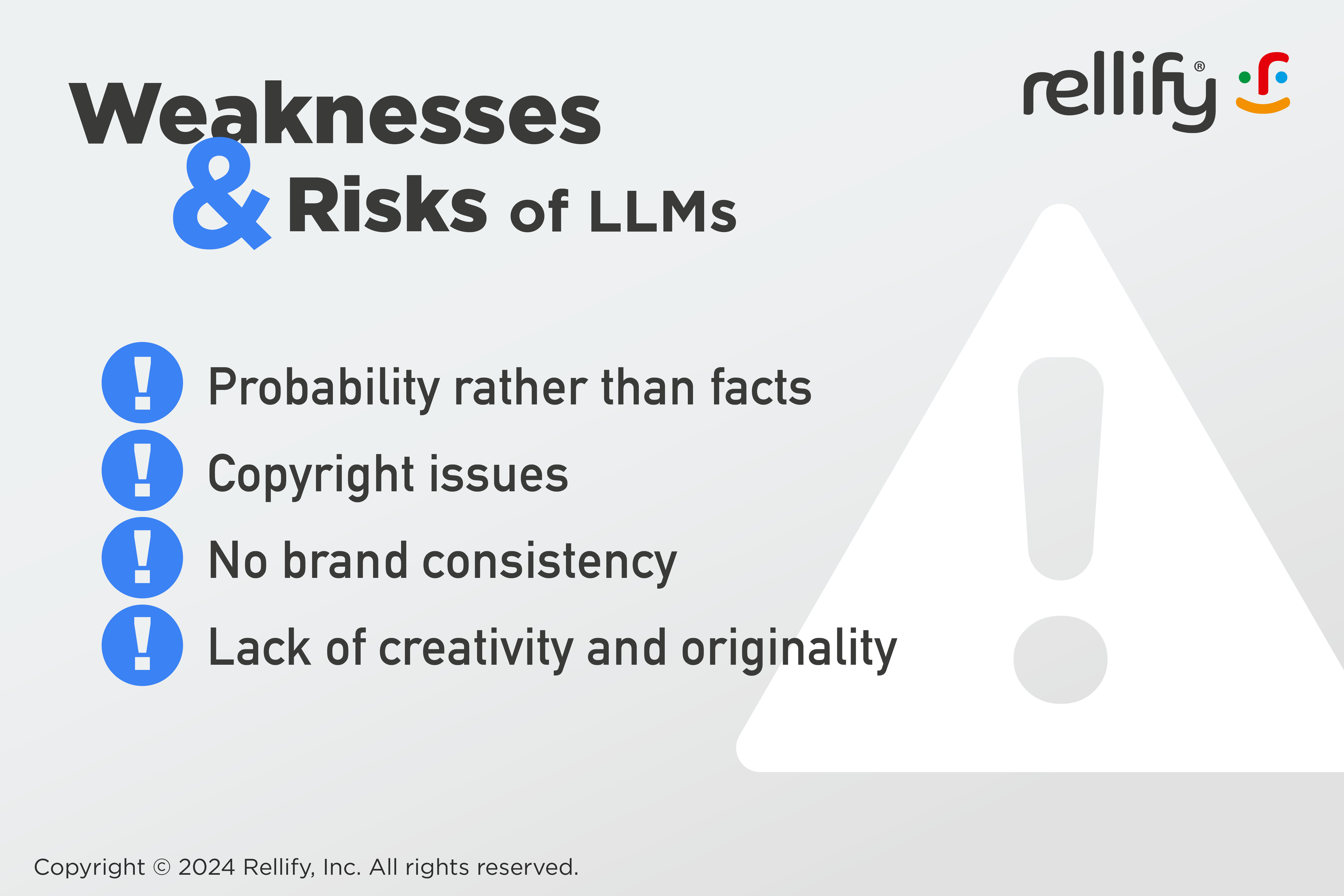

Weaknesses and Risks of LLMs

First, consider that LLMs have no understanding of language in the strict sense, nor do they have any notion of true and false. Everything an LLM outputs is merely the "most likely" string of words to follow the prompt (the query or task) entered. The brilliance of the programmers who create these models, the vast amounts of training data and the sheer size of the models themselves (GPT-4 reportedly has on the order of 1 trillion parameters, a figure OpenAI has not confirmed) can produce valuable results. These results can give the impression that the machine somehow understands the prompt and responds to it in the proper context and with a reliable degree of authority or authenticity. In fact, this is not the case, and the process has its flaws.

Probability rather than facts

Put simply, an LLM responds to a query with a string of words that, according to its analysis of billions of "events," would most often follow the input string (the query). In addition, the training data has a cutoff date, so it is typically not up to date. GPT-3.5, the model behind ChatGPT at its launch, was trained on data up to 2021, meaning that it has little to nothing to say about topics that appeared on the web after that date. GPT-4 has since been updated with more recent information.
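To make the "most likely continuation" idea concrete, here is a deliberately tiny Python sketch. It is an illustration under heavy simplifying assumptions, not how ChatGPT is actually built: real LLMs use neural networks over subword tokens and vast training sets, whereas this toy simply counts which word follows which in a made-up three-sentence corpus and always picks the most frequent follower. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: the false claim appears more often than the true one.
CORPUS = (
    "the moon is made of cheese . "
    "the moon is made of cheese . "
    "the moon is made of rock . "
)


def build_bigram_counts(text: str) -> dict[str, Counter]:
    """Count how often each word directly follows each other word."""
    words = text.split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_continuation(counts: dict[str, Counter], prompt: str, length: int = 1) -> str:
    """Greedily append the most frequent next word.

    The model has no notion of true or false; it only knows frequencies.
    """
    words = prompt.split()
    for _ in range(length):
        followers = counts.get(words[-1])
        if not followers:  # unseen word: nothing to predict
            break
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    counts = build_bigram_counts(CORPUS)
    print(most_likely_continuation(counts, "the moon is made of"))
    # prints: the moon is made of cheese
```

Because "cheese" follows "made of" more often than "rock" in the toy corpus, the model confidently completes the sentence with it. Frequency, not truth, drives the answer, and a skewed dataset produces a skewed output.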

Granted, that doesn't mean an LLM's answers are always wrong or only randomly correct. Quite the contrary: thanks to the large amount of available data, they are probably correct in the majority of cases. What makes companies uncomfortable, however, is that they cannot tell when the machine is right and when it is wrong. In principle, every statement has to be checked.

Another issue is that the machine inevitably adopts the biases of the material it was trained on. ChatGPT may well return a politically incorrect statement, for example, because such content predominates in its training data on that topic. Publishing such a statement can quickly get a company into trouble.

Copyright issues

Another concern relates to intellectual property. What if ChatGPT reproduces copyrighted content? That exposes the user to the risk of legal disputes, and dozens of lawsuits are already pending against OpenAI and other LLM providers in the U.S.

No brand consistency

LLMs do not recognize the values and other attributes of the organizations using them and consequently cannot reflect them in brand-specific content.

Lack of creativity and originality

Last but not least, large language models are, by their very structure, not creative. ChatGPT says of itself that it is well suited for idea generation, but the ideas it presents are merely a systematic rehash of existing approaches in its training data and therefore not very original. For example, if you ask ChatGPT what to write about on a particular topic, you'll receive very generic suggestions that you likely could have thought of yourself.

In short: Companies can't simply create content with ChatGPT and then use it unchecked. At the very least, companies must define a quality assurance process and act on it. As reliance on AI grows, human discernment and editorial diligence will become more important than ever.

Ways Out of the LLM Problem

A company that realizes it can't blindly use ChatGPT and other LLMs for many applications is left with three strategic options:

"Pimp my ride"

Companies use refined tools to create prompts for LLMs. This way, they get better results that can also reflect their brand language, but the quality of the results remains dependent on those closed LLMs and on constantly fine-tuning the prompts. They also need a dedicated prompt engineer, who has to be funded from their budget.

Do it yourself

Companies develop their own in-house AI, e.g., based on open-source models. If they buy and analyze the right data, the model will be more relevant and its responses should be more accurate. Of course, to do this they need a professional AI department, a resilient budget and the ability to manage a large IT project and lead it to success. For most companies, this is simply not feasible.

Enterprise AI (AI-as-a-Service)

Enterprises purchase a proprietary, topic-specific AI developed especially for them, such as Rellify's Relliverse™. Built on well-defined, relevant and continuously updated data, it gives customers an exclusive, bespoke solution with full access and control.

What Differentiates an Enterprise AI From an LLM?

The most important difference between a topic-specific AI like the Relliverse™ and the big, closed LLMs like ChatGPT stems from the underlying data. Unlike the catch-all generic-data mishmash of the big LLMs, the data for a Relliverse™ is curated individually for each customer using crawlers and machine learning. The system crawls the URLs of the client company and its competitors and uses its patent-pending deep machine learning to analyze vast amounts of related documents.

This process identifies tens of thousands of keywords and phrases, as well as millions of connections between them. All relevant search terms are then assigned. Next, the AI clusters the data into main topics and level-1, level-2 and level-3 subtopics, taking into account millions of data points and conducting a depth of analysis that no human team could match.

The results of this process are made available in the browser-based Rellify Content Intelligence application. There you can see at a glance all the topics that the AI analysis deems strategically important and that, if addressed appropriately, will help secure topical authority and generate organic traffic. Content is then created, based on the content intelligence provided by the Relliverse™, within the same application in a continuous process guided and supported by AI.

Advantages of an Enterprise AI-as-a-Service

The advantage of a Relliverse™ lies in the underlying data. Since the system is based exclusively on content that is strategically relevant to the commissioning company's topics, the result is a true subject-matter expert AI. Its users can understand their own content and that of their competitors like never before. They can also see their content market share and visibility at a glance and always know what to write about and why, without having to perform constant, tedious analyses.

In addition, AI helps in the guided writing process of the Content Intelligence application. In the first step, users can see at a glance the strategic topics to pursue based on the findings of the Relliverse™. Once a topic has been selected, the editor or writer can manually compose the article structure using easy, intuitive drag-and-drop functionality. Or, with one click, they can let the AI develop an article outline based on the selected topic and the assigned keywords.

When writing the article itself, the author can have the entire article created automatically or have one or more paragraphs auto-generated for each heading or subhead. This is where another critical competitive advantage of a Relliverse™ becomes apparent: In the automated AI content generation, the LLM behind it (currently GPT-4) is fed prompts that take the entire brief (content structure, keywords, questions, etc.) into account. This means that the LLM receives an optimized, customer-specific prompt with exactly the inputs relevant to the text to be generated. Rellify's approach is designed to deliver the highest possible output quality.
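Rellify does not publish its prompt format, so the following Python sketch is purely hypothetical. It only illustrates the general idea described above: assembling a section-level prompt from the whole brief (outline, keywords, reader questions) rather than sending a bare one-line request. The `ContentBrief` class, its field names and `build_section_prompt` are invented for illustration, and the sketch stops before any call to an LLM API.

```python
from dataclasses import dataclass, field


@dataclass
class ContentBrief:
    """Hypothetical brief; field names are illustrative, not Rellify's format."""
    topic: str
    outline: list[str]
    keywords: list[str] = field(default_factory=list)
    questions: list[str] = field(default_factory=list)


def build_section_prompt(brief: ContentBrief, heading: str) -> str:
    """Build a prompt for one section that carries the whole brief as context."""
    if heading not in brief.outline:
        raise ValueError(f"Heading not in outline: {heading!r}")
    lines = [
        f"Write one section of an article about {brief.topic}.",
        f"Section heading: {heading}",
        # Including the full outline is meant to help the model stay
        # consistent with the other sections of the article.
        "Full article outline: " + "; ".join(brief.outline),
    ]
    if brief.keywords:
        lines.append("Work in these keywords naturally: " + ", ".join(brief.keywords))
    if brief.questions:
        lines.append("Answer these reader questions where relevant: " + " | ".join(brief.questions))
    return "\n".join(lines)


if __name__ == "__main__":
    brief = ContentBrief(
        topic="enterprise AI for marketing",
        outline=["What is enterprise AI?", "Benefits for content teams"],
        keywords=["enterprise AI", "content intelligence"],
        questions=["How does it differ from an LLM?"],
    )
    print(build_section_prompt(brief, "Benefits for content teams"))
```

A prompt built this way hands the model the surrounding structure, keywords and questions for every section it writes, which is the point of feeding it the full brief instead of a single heading.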

To return to the analogy used at the beginning, a proprietary enterprise AI-as-a-service is a perfect fit, like a bespoke suit. It reflects the client's personality and represents the organization with a level of authority and professionalism that inspires confidence in the market. Put simply, it makes the wearer look better overall than rivals that use an off-the-rack LLM. And that's a real competitive advantage in a marketplace where large language models are churning out content at a record pace, all vying for the same readers.

*Text quoted from ChatGPT.